_id

string | id

string | author

string | baseModels

dict | downloads

int64 | downloads_all_time

int64 | gated

string | created_at

timestamp[us, tz=UTC] | last_modified

timestamp[us, tz=UTC] | library_name

string | likes

int64 | trending_score

float64 | model_index

string | pipeline_tag

string | safetensors

string | siblings

list | sizes

list | total_size

int64 | sha

string | tags

list | gguf

string | card

string | spaces

list | licenses

list | datasets

list | languages

list | safetensors_params

float64 | gguf_params

float64 | tasks

list | metrics

list | architectures

list | modalities

list | input_modalities

list | output_modalities

list | org_model

string | org_type

string | org_country

list | a_gated

string | a_baseModels

string | a_input_modalities

list | a_output_modalities

list | a_architectures

list | a_languages

list | a_training_methods

list | a_ddpa

string | annotator

int64 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

68ac69484a1f0871ddf555e4

|

microsoft/VibeVoice-1.5B

|

microsoft

| null | 87,188

| 87,188

|

False

| 2025-08-25T13:46:48Z

| 2025-08-28T04:57:59Z

| null | 1,117

| 1,117

| null |

text-to-speech

|

{"parameters": {"BF16": 2704021985}, "total": 2704021985}

|

[

".gitattributes",

"README.md",

"config.json",

"figures/Fig1.png",

"model-00001-of-00003.safetensors",

"model-00002-of-00003.safetensors",

"model-00003-of-00003.safetensors",

"model.safetensors.index.json",

"preprocessor_config.json"

] |

[

1603,

7273,

2762,

153971,

1975317828,

1983051688,

1449832938,

122616,

351

] | 5,408,491,030

|

cf42b8ff262f8a286bcbe580835cfaad62d277ca

|

[

"safetensors",

"vibevoice",

"Podcast",

"text-to-speech",

"en",

"zh",

"arxiv:2508.19205",

"arxiv:2412.08635",

"license:mit",

"region:us"

] | null |

## VibeVoice: A Frontier Open-Source Text-to-Speech Model

VibeVoice is a novel framework designed for generating expressive, long-form, multi-speaker conversational audio, such as podcasts, from text. It addresses significant challenges in traditional Text-to-Speech (TTS) systems, particularly in scalability, speaker consistency, and natural turn-taking.

A core innovation of VibeVoice is its use of continuous speech tokenizers (Acoustic and Semantic) operating at an ultra-low frame rate of 7.5 Hz. These tokenizers efficiently preserve audio fidelity while significantly boosting computational efficiency for processing long sequences. VibeVoice employs a next-token diffusion framework, leveraging a Large Language Model (LLM) to understand textual context and dialogue flow, and a diffusion head to generate high-fidelity acoustic details.

The model can synthesize speech up to **90 minutes** long with up to **4 distinct speakers**, surpassing the typical 1-2 speaker limits of many prior models.

➡️ **Technical Report:** [VibeVoice Technical Report](https://arxiv.org/abs/2508.19205)

➡️ **Project Page:** [microsoft/VibeVoice](https://microsoft.github.io/VibeVoice)

➡️ **Code:** [microsoft/VibeVoice-Code](https://github.com/microsoft/VibeVoice)

<p align="left">

<img src="figures/Fig1.png" alt="VibeVoice Overview" height="250px">

</p>

## Training Details

Transformer-based Large Language Model (LLM) integrated with specialized acoustic and semantic tokenizers and a diffusion-based decoding head.

- LLM: [Qwen2.5-1.5B](https://huggingface.co/Qwen/Qwen2.5-1.5B) for this release.

- Tokenizers:

- Acoustic Tokenizer: Based on a σ-VAE variant (proposed in [LatentLM](https://arxiv.org/pdf/2412.08635)), with a mirror-symmetric encoder-decoder structure featuring 7 stages of modified Transformer blocks. Achieves 3200x downsampling from 24kHz input. Encoder/decoder components are ~340M parameters each.

- Semantic Tokenizer: Encoder mirrors the Acoustic Tokenizer's architecture (without VAE components). Trained with an ASR proxy task.

- Diffusion Head: Lightweight module (4 layers, ~123M parameters) conditioned on LLM hidden states. Predicts acoustic VAE features using a Denoising Diffusion Probabilistic Models (DDPM) process. Uses Classifier-Free Guidance (CFG) and DPM-Solver (and variants) during inference.

- Context Length: Trained with a curriculum increasing up to 65,536 tokens.

- Training Stages:

- Tokenizer Pre-training: Acoustic and Semantic tokenizers are pre-trained separately.

- VibeVoice Training: Pre-trained tokenizers are frozen; only the LLM and diffusion head parameters are trained. A curriculum learning strategy is used for input sequence length (4k -> 16K -> 32K -> 64K). Text tokenizer not explicitly specified, but the LLM (Qwen2.5) typically uses its own. Audio is "tokenized" via the acoustic and semantic tokenizers.

## Models

| Model | Context Length | Generation Length | Weight |

|-------|----------------|----------|----------|

| VibeVoice-0.5B-Streaming | - | - | On the way |

| VibeVoice-1.5B | 64K | ~90 min | You are here. |

| VibeVoice-7B-Preview| 32K | ~45 min | [HF link](https://huggingface.co/WestZhang/VibeVoice-Large-pt) |

## Installation and Usage

Please refer to [GitHub README](https://github.com/microsoft/VibeVoice?tab=readme-ov-file#installation)

## Responsible Usage

### Direct intended uses

The VibeVoice model is limited to research purpose use exploring highly realistic audio dialogue generation detailed in the [tech report](https://github.com/microsoft/VibeVoice/blob/main/report/TechnicalReport.pdf).

### Out-of-scope uses

Use in any manner that violates applicable laws or regulations (including trade compliance laws). Use in any other way that is prohibited by MIT License. Use to generate any text transcript. Furthermore, this release is not intended or licensed for any of the following scenarios:

- Voice impersonation without explicit, recorded consent – cloning a real individual’s voice for satire, advertising, ransom, social‑engineering, or authentication bypass.

- Disinformation or impersonation – creating audio presented as genuine recordings of real people or events.

- Real‑time or low‑latency voice conversion – telephone or video‑conference “live deep‑fake” applications.

- Unsupported language – the model is trained only on English and Chinese data; outputs in other languages are unsupported and may be unintelligible or offensive.

- Generation of background ambience, Foley, or music – VibeVoice is speech‑only and will not produce coherent non‑speech audio.

## Risks and limitations

While efforts have been made to optimize it through various techniques, it may still produce outputs that are unexpected, biased, or inaccurate. VibeVoice inherits any biases, errors, or omissions produced by its base model (specifically, Qwen2.5 1.5b in this release).

Potential for Deepfakes and Disinformation: High-quality synthetic speech can be misused to create convincing fake audio content for impersonation, fraud, or spreading disinformation. Users must ensure transcripts are reliable, check content accuracy, and avoid using generated content in misleading ways. Users are expected to use the generated content and to deploy the models in a lawful manner, in full compliance with all applicable laws and regulations in the relevant jurisdictions. It is best practice to disclose the use of AI when sharing AI-generated content.

English and Chinese only: Transcripts in language other than English or Chinese may result in unexpected audio outputs.

Non-Speech Audio: The model focuses solely on speech synthesis and does not handle background noise, music, or other sound effects.

Overlapping Speech: The current model does not explicitly model or generate overlapping speech segments in conversations.

## Recommendations

We do not recommend using VibeVoice in commercial or real-world applications without further testing and development. This model is intended for research and development purposes only. Please use responsibly.

To mitigate the risks of misuse, we have:

Embedded an audible disclaimer (e.g. “This segment was generated by AI”) automatically into every synthesized audio file.

Added an imperceptible watermark to generated audio so third parties can verify VibeVoice provenance. Please see contact information at the end of this model card.

Logged inference requests (hashed) for abuse pattern detection and publishing aggregated statistics quarterly.

Users are responsible for sourcing their datasets legally and ethically. This may include securing appropriate rights and/or anonymizing data prior to use with VibeVoice. Users are reminded to be mindful of data privacy concerns.

## Contact

This project was conducted by members of Microsoft Research. We welcome feedback and collaboration from our audience. If you have suggestions, questions, or observe unexpected/offensive behavior in our technology, please contact us at [email protected].

If the team receives reports of undesired behavior or identifies issues independently, we will update this repository with appropriate mitigations.

|

[

"broadfield-dev/VibeVoice-demo",

"yasserrmd/VibeVoice",

"broadfield-dev/VibeVoice-demo-dev",

"akhaliq/VibeVoice-1.5B",

"mrfakename/VibeVoice-1.5B",

"NeuralFalcon/VibeVoice-Colab",

"thelip/VibeVoice",

"ReallyFloppyPenguin/VibeVoice-demo",

"Xenobd/VibeVoice-demo",

"Dorjzodovsuren/VibeVoice",

"umint/o4-mini",

"krishna-ag/ms-vibe-voice",

"Shubhvedi/Vibe-Voice-TTS",

"danhtran2mind/VibeVoice",

"SiddhJagani/Voice",

"pierreguillou/VibeVoice-demo",

"PunkTink/VibeVoice-mess",

"ginipick/VibeVoice-demo",

"umint/gpt-4.1-nano",

"umint/o3",

"jonathanagustin/vibevoice"

] |

[

"mit"

] | null |

[

"en",

"zh"

] | 2,704,021,985

| null |

[

"text-to-speech"

] | null |

[

"VibeVoiceForConditionalGeneration",

"vibevoice"

] |

[

"audio"

] |

[

"text"

] |

[

"audio"

] |

free

|

company

|

[

"United States of America",

"International",

"India",

"Belgium"

] | null | null | null | null | null | null | null | null | null |

68aaebfbfe684542cfc51e66

|

openbmb/MiniCPM-V-4_5

|

openbmb

| null | 9,706

| 9,706

|

False

| 2025-08-24T10:39:55Z

| 2025-08-31T14:57:14Z

|

transformers

| 747

| 747

| null |

image-text-to-text

|

{"parameters": {"BF16": 8695895280}, "total": 8695895280}

|

[

".gitattributes",

"README.md",

"added_tokens.json",

"config.json",

"configuration_minicpm.py",

"generation_config.json",

"image_processing_minicpmv.py",

"merges.txt",

"model-00001-of-00004.safetensors",

"model-00002-of-00004.safetensors",

"model-00003-of-00004.safetensors",

"model-00004-of-00004.safetensors",

"model.safetensors.index.json",

"modeling_minicpmv.py",

"modeling_navit_siglip.py",

"preprocessor_config.json",

"processing_minicpmv.py",

"resampler.py",

"special_tokens_map.json",

"tokenization_minicpmv_fast.py",

"tokenizer.json",

"tokenizer_config.json",

"vocab.json"

] |

[

1570,

24775,

2862,

1461,

3288,

268,

20757,

1671853,

5286612176,

5301855088,

4546851120,

2256571800,

72172,

17754,

41835,

714,

11026,

11732,

12103,

1647,

11437868,

25786,

2776833

] | 17,408,026,488

|

17353d11601386fac6cca5a541e84b85928bd4ae

|

[

"transformers",

"safetensors",

"minicpmv",

"feature-extraction",

"minicpm-v",

"vision",

"ocr",

"multi-image",

"video",

"custom_code",

"image-text-to-text",

"conversational",

"multilingual",

"dataset:openbmb/RLAIF-V-Dataset",

"arxiv:2403.11703",

"region:us"

] | null |

<h1>A GPT-4o Level MLLM for Single Image, Multi Image and High-FPS Video Understanding on Your Phone</h1>

[GitHub](https://github.com/OpenBMB/MiniCPM-o) | [Demo](http://101.126.42.235:30910/)</a>

## MiniCPM-V 4.5

**MiniCPM-V 4.5** is the latest and most capable model in the MiniCPM-V series. The model is built on Qwen3-8B and SigLIP2-400M with a total of 8B parameters. It exhibits a significant performance improvement over previous MiniCPM-V and MiniCPM-o models, and introduces new useful features. Notable features of MiniCPM-V 4.5 include:

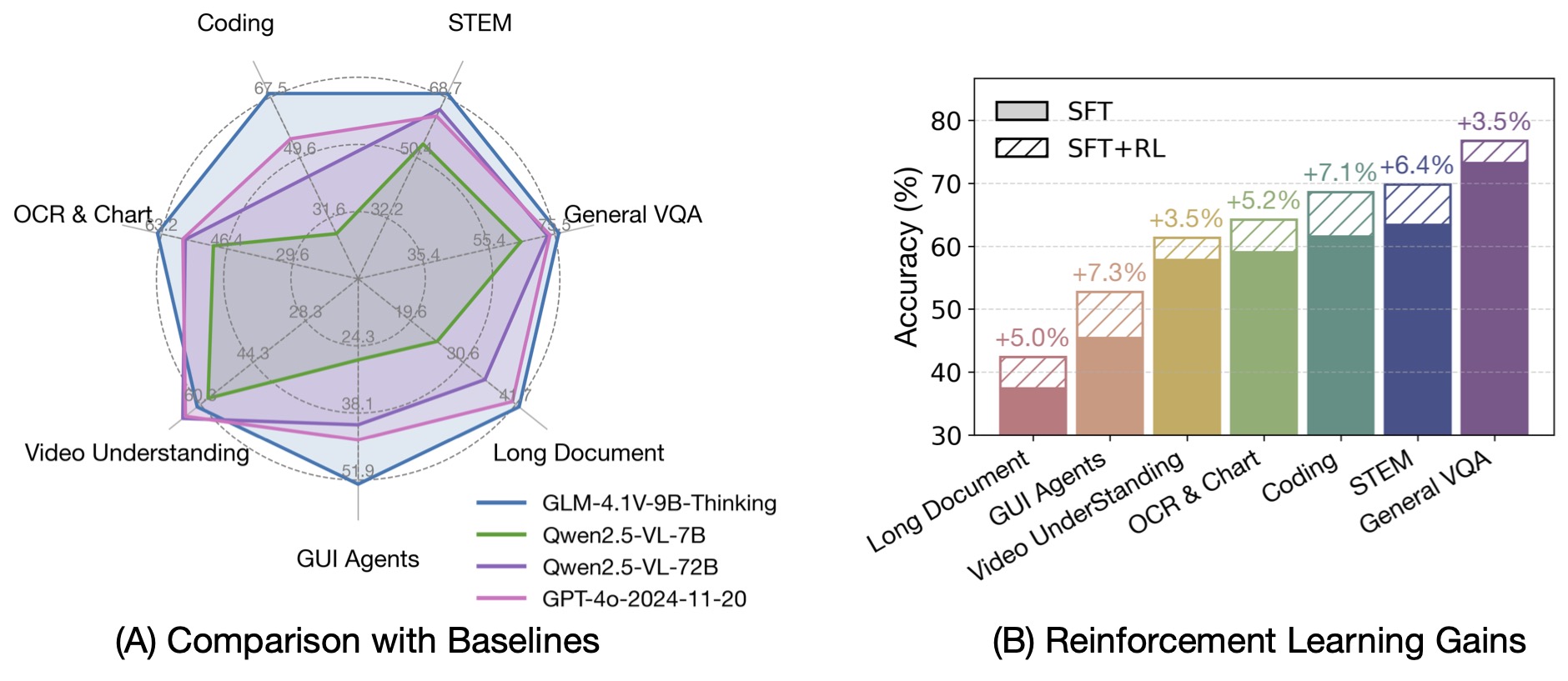

- 🔥 **State-of-the-art Vision-Language Capability.**

MiniCPM-V 4.5 achieves an average score of 77.0 on OpenCompass, a comprehensive evaluation of 8 popular benchmarks. **With only 8B parameters, it surpasses widely used proprietary models like GPT-4o-latest, Gemini-2.0 Pro, and strong open-source models like Qwen2.5-VL 72B** for vision-language capabilities, making it the most performant MLLM under 30B parameters.

- 🎬 **Efficient High-FPS and Long Video Understanding.** Powered by a new unified 3D-Resampler over images and videos, MiniCPM-V 4.5 can now achieve 96x compression rate for video tokens, where 6 448x448 video frames can be jointly compressed into 64 video tokens (normally 1,536 tokens for most MLLMs). This means that the model can perceive significantly more video frames without increasing the LLM inference cost. This brings state-of-the-art high-FPS (up to 10FPS) video understanding and long video understanding capabilities on Video-MME, LVBench, MLVU, MotionBench, FavorBench, etc., efficiently.

- ⚙️ **Controllable Hybrid Fast/Deep Thinking.** MiniCPM-V 4.5 supports both fast thinking for efficient frequent usage with competitive performance, and deep thinking for more complex problem solving. To cover efficiency and performance trade-offs in different user scenarios, this fast/deep thinking mode can be switched in a highly controlled fashion.

- 💪 **Strong OCR, Document Parsing and Others.**

Based on [LLaVA-UHD](https://arxiv.org/pdf/2403.11703) architecture, MiniCPM-V 4.5 can process high-resolution images with any aspect ratio and up to 1.8 million pixels (e.g., 1344x1344), using 4x less visual tokens than most MLLMs. The model achieves **leading performance on OCRBench, surpassing proprietary models such as GPT-4o-latest and Gemini 2.5**. It also achieves state-of-the-art performance for PDF document parsing capability on OmniDocBench among general MLLMs. Based on the latest [RLAIF-V](https://github.com/RLHF-V/RLAIF-V/) and [VisCPM](https://github.com/OpenBMB/VisCPM) techniques, it features **trustworthy behaviors**, outperforming GPT-4o-latest on MMHal-Bench, and supports **multilingual capabilities** in more than 30 languages.

- 💫 **Easy Usage.**

MiniCPM-V 4.5 can be easily used in various ways: (1) [llama.cpp](https://github.com/tc-mb/llama.cpp/blob/Support-MiniCPM-V-4.5/docs/multimodal/minicpmv4.5.md) and [ollama](https://github.com/tc-mb/ollama/tree/MIniCPM-V) support for efficient CPU inference on local devices, (2) [int4](https://huggingface.co/openbmb/MiniCPM-V-4_5-int4), [GGUF](https://huggingface.co/openbmb/MiniCPM-V-4_5-gguf) and [AWQ](https://github.com/tc-mb/AutoAWQ) format quantized models in 16 sizes, (3) [SGLang](https://github.com/tc-mb/sglang/tree/main) and [vLLM](#efficient-inference-with-llamacpp-ollama-vllm) support for high-throughput and memory-efficient inference, (4) fine-tuning on new domains and tasks with [Transformers](https://github.com/tc-mb/transformers/tree/main) and [LLaMA-Factory](./docs/llamafactory_train_and_infer.md), (5) quick [local WebUI demo](#chat-with-our-demo-on-gradio), (6) optimized [local iOS app](https://github.com/tc-mb/MiniCPM-o-demo-iOS) on iPhone and iPad, and (7) online web demo on [server](http://101.126.42.235:30910/). See our [Cookbook](https://github.com/OpenSQZ/MiniCPM-V-CookBook) for full usages!

### Key Techniques

<div align="center">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpm-v-4dot5-framework.png" , width=100%>

</div>

- **Architechture: Unified 3D-Resampler for High-density Video Compression.** MiniCPM-V 4.5 introduces a 3D-Resampler that overcomes the performance-efficiency trade-off in video understanding. By grouping and jointly compressing up to 6 consecutive video frames into just 64 tokens (the same token count used for a single image in MiniCPM-V series), MiniCPM-V 4.5 achieves a 96× compression rate for video tokens. This allows the model to process more video frames without additional LLM computational cost, enabling high-FPS video and long video understanding. The architecture supports unified encoding for images, multi-image inputs, and videos, ensuring seamless capability and knowledge transfer.

- **Pre-training: Unified Learning for OCR and Knowledge from Documents.** Existing MLLMs learn OCR capability and knowledge from documents in isolated training approaches. We observe that the essential difference between these two training approaches is the visibility of the text in images. By dynamically corrupting text regions in documents with varying noise levels and asking the model to reconstruct the text, the model learns to adaptively and properly switch between accurate text recognition (when text is visible) and multimodal context-based knowledge reasoning (when text is heavily obscured). This eliminates reliance on error-prone document parsers in knowledge learning from documents, and prevents hallucinations from over-augmented OCR data, resulting in top-tier OCR and multimodal knowledge performance with minimal engineering overhead.

- **Post-training: Hybrid Fast/Deep Thinking with Multimodal RL.** MiniCPM-V 4.5 offers a balanced reasoning experience through two switchable modes: fast thinking for efficient daily use and deep thinking for complex tasks. Using a new hybrid reinforcement learning method, the model jointly optimizes both modes, significantly enhancing fast-mode performance without compromising deep-mode capability. Incorporated with [RLPR](https://github.com/OpenBMB/RLPR) and [RLAIF-V](https://github.com/RLHF-V/RLAIF-V), it generalizes robust reasoning skills from broad multimodal data while effectively reducing hallucinations.

### Evaluation

<div align="center">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/radar_minicpm_v45.png", width=60%>

</div>

<div align="center">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv_4_5_evaluation_result.png" , width=100%>

</div>

### Inference Efficiency

**OpenCompass**

<div align="left">

<table style="margin: 0px auto;">

<thead>

<tr>

<th align="left">Model</th>

<th>Size</th>

<th>Avg Score ↑</th>

<th>Total Inference Time ↓</th>

</tr>

</thead>

<tbody align="center">

<tr>

<td nowrap="nowrap" align="left">GLM-4.1V-9B-Thinking</td>

<td>10.3B</td>

<td>76.6</td>

<td>17.5h</td>

</tr>

<tr>

<td nowrap="nowrap" align="left">MiMo-VL-7B-RL</td>

<td>8.3B</td>

<td>76.4</td>

<td>11h</td>

</tr>

<tr>

<td nowrap="nowrap" align="left">MiniCPM-V 4.5</td>

<td>8.7B</td>

<td><b>77.0</td>

<td><b>7.5h</td>

</tr>

</tbody>

</table>

</div>

**Video-MME**

<div align="left">

<table style="margin: 0px auto;">

<thead>

<tr>

<th align="left">Model</th>

<th>Size</th>

<th>Avg Score ↑</th>

<th>Total Inference Time ↓</th>

<th>GPU Mem ↓</th>

</tr>

</thead>

<tbody align="center">

<tr>

<td nowrap="nowrap" align="left">Qwen2.5-VL-7B-Instruct</td>

<td>8.3B</td>

<td>71.6</td>

<td>3h</td>

<td>60G</td>

</tr>

<tr>

<td nowrap="nowrap" align="left">GLM-4.1V-9B-Thinking</td>

<td>10.3B</td>

<td><b>73.6</td>

<td>2.63h</td>

<td>32G</td>

</tr>

<tr>

<td nowrap="nowrap" align="left">MiniCPM-V 4.5</td>

<td>8.7B</td>

<td>73.5</td>

<td><b>0.26h</td>

<td><b>28G</td>

</tr>

</tbody>

</table>

</div>

Both Video-MME and OpenCompass were evaluated using 8×A100 GPUs for inference. The reported inference time of Video-MME includes full model-side computation, and excludes the external cost of video frame extraction (dependent on specific frame extraction tools) for fair comparison.

### Examples

<div align="center">

<a href="https://www.youtube.com/watch?v=Cn23FujYMMU"><img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/MiniCPM-V%204.5-8.26_img.jpeg", width=70%></a>

</div>

<div style="display: flex; flex-direction: column; align-items: center;">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/en_case1.png" alt="en_case1" style="margin-bottom: 5px;">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/en_case2.png" alt="en_case2" style="margin-bottom: 5px;">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/en_case3.jpeg" alt="en_case3" style="margin-bottom: 5px;">

</div>

We deploy MiniCPM-V 4.5 on iPad M4 with [iOS demo](https://github.com/tc-mb/MiniCPM-o-demo-iOS). The demo video is the raw screen recording without editing.

<div align="center">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/v45_en_handwriting.gif" width="45%" style="display: inline-block; margin: 0 10px;"/>

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/v45_en_cot.gif" width="45%" style="display: inline-block; margin: 0 10px;"/>

</div>

<div align="center">

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/v45_cn_handwriting.gif" width="45%" style="display: inline-block; margin: 0 10px;"/>

<img src="https://raw.githubusercontent.com/openbmb/MiniCPM-o/main/assets/minicpmv4_5/v45_cn_travel.gif" width="45%" style="display: inline-block; margin: 0 10px;"/>

</div>

## Usage

If you wish to enable thinking mode, provide the argument `enable_thinking=True` to the chat function.

#### Chat with Image

```python

import torch

from PIL import Image

from transformers import AutoModel, AutoTokenizer

torch.manual_seed(100)

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True, # or openbmb/MiniCPM-o-2_6

attn_implementation='sdpa', torch_dtype=torch.bfloat16) # sdpa or flash_attention_2, no eager

model = model.eval().cuda()

tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True) # or openbmb/MiniCPM-o-2_6

image = Image.open('./assets/minicpmo2_6/show_demo.jpg').convert('RGB')

enable_thinking=False # If `enable_thinking=True`, the thinking mode is enabled.

stream=True # If `stream=True`, the answer is string

# First round chat

question = "What is the landform in the picture?"

msgs = [{'role': 'user', 'content': [image, question]}]

answer = model.chat(

msgs=msgs,

tokenizer=tokenizer,

enable_thinking=enable_thinking,

stream=True

)

generated_text = ""

for new_text in answer:

generated_text += new_text

print(new_text, flush=True, end='')

# Second round chat, pass history context of multi-turn conversation

msgs.append({"role": "assistant", "content": [answer]})

msgs.append({"role": "user", "content": ["What should I pay attention to when traveling here?"]})

answer = model.chat(

msgs=msgs,

tokenizer=tokenizer,

stream=True

)

generated_text = ""

for new_text in answer:

generated_text += new_text

print(new_text, flush=True, end='')

```

You will get the following output:

```shell

# round1

The landform in the picture is karst topography. Karst landscapes are characterized by distinctive, jagged limestone hills or mountains with steep, irregular peaks and deep valleys—exactly what you see here These unique formations result from the dissolution of soluble rocks like limestone over millions of years through water erosion.

This scene closely resembles the famous karst landscape of Guilin and Yangshuo in China’s Guangxi Province. The area features dramatic, pointed limestone peaks rising dramatically above serene rivers and lush green forests, creating a breathtaking and iconic natural beauty that attracts millions of visitors each year for its picturesque views.

# round2

When traveling to a karst landscape like this, here are some important tips:

1. Wear comfortable shoes: The terrain can be uneven and hilly.

2. Bring water and snacks for energy during hikes or boat rides.

3. Protect yourself from the sun with sunscreen, hats, and sunglasses—especially since you’ll likely spend time outdoors exploring scenic spots.

4. Respect local customs and nature regulations by not littering or disturbing wildlife.

By following these guidelines, you'll have a safe and enjoyable trip while appreciating the stunning natural beauty of places such as Guilin’s karst mountains.

```

#### Chat with Video

```python

## The 3d-resampler compresses multiple frames into 64 tokens by introducing temporal_ids.

# To achieve this, you need to organize your video data into two corresponding sequences:

# frames: List[Image]

# temporal_ids: List[List[Int]].

import torch

from PIL import Image

from transformers import AutoModel, AutoTokenizer

from decord import VideoReader, cpu # pip install decord

from scipy.spatial import cKDTree

import numpy as np

import math

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True, # or openbmb/MiniCPM-o-2_6

attn_implementation='sdpa', torch_dtype=torch.bfloat16) # sdpa or flash_attention_2, no eager

model = model.eval().cuda()

tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True) # or openbmb/MiniCPM-o-2_6

MAX_NUM_FRAMES=180 # Indicates the maximum number of frames received after the videos are packed. The actual maximum number of valid frames is MAX_NUM_FRAMES * MAX_NUM_PACKING.

MAX_NUM_PACKING=3 # indicates the maximum packing number of video frames. valid range: 1-6

TIME_SCALE = 0.1

def map_to_nearest_scale(values, scale):

tree = cKDTree(np.asarray(scale)[:, None])

_, indices = tree.query(np.asarray(values)[:, None])

return np.asarray(scale)[indices]

def group_array(arr, size):

return [arr[i:i+size] for i in range(0, len(arr), size)]

def encode_video(video_path, choose_fps=3, force_packing=None):

def uniform_sample(l, n):

gap = len(l) / n

idxs = [int(i * gap + gap / 2) for i in range(n)]

return [l[i] for i in idxs]

vr = VideoReader(video_path, ctx=cpu(0))

fps = vr.get_avg_fps()

video_duration = len(vr) / fps

if choose_fps * int(video_duration) <= MAX_NUM_FRAMES:

packing_nums = 1

choose_frames = round(min(choose_fps, round(fps)) * min(MAX_NUM_FRAMES, video_duration))

else:

packing_nums = math.ceil(video_duration * choose_fps / MAX_NUM_FRAMES)

if packing_nums <= MAX_NUM_PACKING:

choose_frames = round(video_duration * choose_fps)

else:

choose_frames = round(MAX_NUM_FRAMES * MAX_NUM_PACKING)

packing_nums = MAX_NUM_PACKING

frame_idx = [i for i in range(0, len(vr))]

frame_idx = np.array(uniform_sample(frame_idx, choose_frames))

if force_packing:

packing_nums = min(force_packing, MAX_NUM_PACKING)

print(video_path, ' duration:', video_duration)

print(f'get video frames={len(frame_idx)}, packing_nums={packing_nums}')

frames = vr.get_batch(frame_idx).asnumpy()

frame_idx_ts = frame_idx / fps

scale = np.arange(0, video_duration, TIME_SCALE)

frame_ts_id = map_to_nearest_scale(frame_idx_ts, scale) / TIME_SCALE

frame_ts_id = frame_ts_id.astype(np.int32)

assert len(frames) == len(frame_ts_id)

frames = [Image.fromarray(v.astype('uint8')).convert('RGB') for v in frames]

frame_ts_id_group = group_array(frame_ts_id, packing_nums)

return frames, frame_ts_id_group

video_path="video_test.mp4"

fps = 5 # fps for video

force_packing = None # You can set force_packing to ensure that 3D packing is forcibly enabled; otherwise, encode_video will dynamically set the packing quantity based on the duration.

frames, frame_ts_id_group = encode_video(video_path, fps, force_packing=force_packing)

question = "Describe the video"

msgs = [

{'role': 'user', 'content': frames + [question]},

]

answer = model.chat(

msgs=msgs,

tokenizer=tokenizer,

use_image_id=False,

max_slice_nums=1,

temporal_ids=frame_ts_id_group

)

print(answer)

```

#### Chat with multiple images

<details>

<summary> Click to show Python code running MiniCPM-V 4.5 with multiple images input. </summary>

```python

import torch

from PIL import Image

from transformers import AutoModel, AutoTokenizer

model = AutoModel.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True,

attn_implementation='sdpa', torch_dtype=torch.bfloat16) # sdpa or flash_attention_2

model = model.eval().cuda()

tokenizer = AutoTokenizer.from_pretrained('openbmb/MiniCPM-V-4_5', trust_remote_code=True)

image1 = Image.open('image1.jpg').convert('RGB')

image2 = Image.open('image2.jpg').convert('RGB')

question = 'Compare image 1 and image 2, tell me about the differences between image 1 and image 2.'

msgs = [{'role': 'user', 'content': [image1, image2, question]}]

answer = model.chat(

image=None,

msgs=msgs,

tokenizer=tokenizer

)

print(answer)

```

</details>

## License

#### Model License

* The code in this repo is released under the [Apache-2.0](https://github.com/OpenBMB/MiniCPM/blob/main/LICENSE) License.

* The usage of MiniCPM-V series model weights must strictly follow [MiniCPM Model License.md](https://github.com/OpenBMB/MiniCPM-o/blob/main/MiniCPM%20Model%20License.md).

* The models and weights of MiniCPM are completely free for academic research. After filling out a ["questionnaire"](https://modelbest.feishu.cn/share/base/form/shrcnpV5ZT9EJ6xYjh3Kx0J6v8g) for registration, MiniCPM-V 4.5 weights are also available for free commercial use.

#### Statement

* As an LMM, MiniCPM-V 4.5 generates contents by learning a large amount of multimodal corpora, but it cannot comprehend, express personal opinions or make value judgement. Anything generated by MiniCPM-V 4.5 does not represent the views and positions of the model developers

* We will not be liable for any problems arising from the use of the MinCPM-V models, including but not limited to data security issues, risk of public opinion, or any risks and problems arising from the misdirection, misuse, dissemination or misuse of the model.

## Key Techniques and Other Multimodal Projects

👏 Welcome to explore key techniques of MiniCPM-V 4.5 and other multimodal projects of our team:

[VisCPM](https://github.com/OpenBMB/VisCPM/tree/main) | [RLPR](https://github.com/OpenBMB/RLPR) | [RLHF-V](https://github.com/RLHF-V/RLHF-V) | [LLaVA-UHD](https://github.com/thunlp/LLaVA-UHD) | [RLAIF-V](https://github.com/RLHF-V/RLAIF-V)

## Citation

If you find our work helpful, please consider citing our papers 📝 and liking this project ❤️!

```bib

@article{yao2024minicpm,

title={MiniCPM-V: A GPT-4V Level MLLM on Your Phone},

author={Yao, Yuan and Yu, Tianyu and Zhang, Ao and Wang, Chongyi and Cui, Junbo and Zhu, Hongji and Cai, Tianchi and Li, Haoyu and Zhao, Weilin and He, Zhihui and others},

journal={Nat Commun 16, 5509 (2025)},

year={2025}

}

```

|

[

"akhaliq/MiniCPM-V-4_5",

"orrzxz/MiniCPM-V-4_5",

"WYC-2025/MiniCPM-V-4_5",

"CGQN/MiniCPM-V-4_5",

"CGQN/MiniCPM-V-4_5-from_gpt5",

"CGQN/MiniCPM-V-4_5-CPU-0"

] | null |

[

"openbmb/RLAIF-V-Dataset"

] |

[

"multilingual"

] | 8,695,895,280

| null |

[

"feature-extraction",

"image-text-to-text"

] | null |

[

"modeling_minicpmv.MiniCPMV",

"MiniCPMV",

"AutoModel",

"minicpmv"

] |

[

"multimodal"

] |

[

"text",

"image"

] |

[

"embeddings",

"text"

] |

free

|

community

|

[

"China"

] | null | null | null | null | null | null | null | null | null |

68a8de283195d5730fd2c5b8

|

xai-org/grok-2

|

xai-org

| null | 4,047

| 4,047

|

False

| 2025-08-22T21:16:24Z

| 2025-08-24T00:59:56Z

| null | 879

| 485

| null | null | null |

[

".gitattributes",

"LICENSE",

"README.md",

"config.json",

"pytorch_model-00000-TP-common.safetensors",

"pytorch_model-00001-TP-common.safetensors",

"pytorch_model-00002-TP-common.safetensors",

"pytorch_model-00003-TP-common.safetensors",

"pytorch_model-00004-TP-common.safetensors",

"pytorch_model-00005-TP-common.safetensors",

"pytorch_model-00006-TP-000.safetensors",

"pytorch_model-00006-TP-001.safetensors",

"pytorch_model-00006-TP-002.safetensors",

"pytorch_model-00006-TP-003.safetensors",

"pytorch_model-00006-TP-004.safetensors",

"pytorch_model-00006-TP-005.safetensors",

"pytorch_model-00006-TP-006.safetensors",

"pytorch_model-00006-TP-007.safetensors",

"pytorch_model-00007-TP-000.safetensors",

"pytorch_model-00007-TP-001.safetensors",

"pytorch_model-00007-TP-002.safetensors",

"pytorch_model-00007-TP-003.safetensors",

"pytorch_model-00007-TP-004.safetensors",

"pytorch_model-00007-TP-005.safetensors",

"pytorch_model-00007-TP-006.safetensors",

"pytorch_model-00007-TP-007.safetensors",

"pytorch_model-00008-TP-000.safetensors",

"pytorch_model-00008-TP-001.safetensors",

"pytorch_model-00008-TP-002.safetensors",

"pytorch_model-00008-TP-003.safetensors",

"pytorch_model-00008-TP-004.safetensors",

"pytorch_model-00008-TP-005.safetensors",

"pytorch_model-00008-TP-006.safetensors",

"pytorch_model-00008-TP-007.safetensors",

"pytorch_model-00009-TP-common.safetensors",

"pytorch_model-00010-TP-common.safetensors",

"pytorch_model-00011-TP-common.safetensors",

"pytorch_model-00012-TP-common.safetensors",

"pytorch_model-00013-TP-common.safetensors",

"pytorch_model-00014-TP-common.safetensors",

"pytorch_model-00015-TP-common.safetensors",

"pytorch_model-00016-TP-common.safetensors",

"pytorch_model-00017-TP-common.safetensors",

"tokenizer.tok.json"

] |

[

1519,

5362,

1583,

947,

2147483760,

2147483744,

16472,

34359745872,

34359745872,

34359745744,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

17179936544,

1073749240,

8589942120,

8589942120,

1073749240,

1055096,

1055160,

1055032,

1055096,

8395888,

7724637

] | 539,040,431,560

|

d60cbe267db8bb43be676bc80e200c64268ea8ec

|

[

"git",

"region:us"

] | null |

# Grok 2

This repository contains the weights of Grok 2, a model trained and used at xAI in 2024.

## Usage: Serving with SGLang

- Download the weights. You can replace `/local/grok-2` with any other folder name you prefer.

```

hf download xai-org/grok-2 --local-dir /local/grok-2

```

You might encounter some errors during the download. Please retry until the download is successful.

If the download succeeds, the folder should contain **42 files** and be approximately 500 GB.

- Launch a server.

Install the latest SGLang inference engine (>= v0.5.1) from https://github.com/sgl-project/sglang/

Use the command below to launch an inference server. This checkpoint is TP=8, so you will need 8 GPUs (each with > 40GB of memory).

```

python3 -m sglang.launch_server --model /local/grok-2 --tokenizer-path /local/grok-2/tokenizer.tok.json --tp 8 --quantization fp8 --attention-backend triton

```

- Send a request.

This is a post-trained model, so please use the correct [chat template](https://github.com/sgl-project/sglang/blob/97a38ee85ba62e268bde6388f1bf8edfe2ca9d76/python/sglang/srt/tokenizer/tiktoken_tokenizer.py#L106).

```

python3 -m sglang.test.send_one --prompt "Human: What is your name?<|separator|>\n\nAssistant:"

```

You should be able to see the model output its name, Grok.

Learn more about other ways to send requests [here](https://docs.sglang.ai/basic_usage/send_request.html).

## License

The weights are licensed under the [Grok 2 Community License Agreement](https://huggingface.co/xai-org/grok-2/blob/main/LICENSE).

|

[

"umint/o4-mini",

"AnilNiraula/FinChat",

"umint/gpt-4.1-nano",

"umint/o3"

] | null | null | null | null | null | null | null |

[

"Grok1ForCausalLM",

"git"

] | null | null | null |

team

|

company

|

[

"United States of America"

] | null | null | null | null | null | null | null | null | null |

68a19381db43c983deb63fa5

|

Qwen/Qwen-Image-Edit

|

Qwen

| null | 75,516

| 75,516

|

False

| 2025-08-17T08:32:01Z

| 2025-08-25T04:41:11Z

|

diffusers

| 1,545

| 359

| null |

image-to-image

| null |

[

".gitattributes",

"README.md",

"model_index.json",

"processor/added_tokens.json",

"processor/chat_template.jinja",

"processor/merges.txt",

"processor/preprocessor_config.json",

"processor/special_tokens_map.json",

"processor/tokenizer.json",

"processor/tokenizer_config.json",

"processor/video_preprocessor_config.json",

"processor/vocab.json",

"scheduler/scheduler_config.json",

"text_encoder/config.json",

"text_encoder/generation_config.json",

"text_encoder/model-00001-of-00004.safetensors",

"text_encoder/model-00002-of-00004.safetensors",

"text_encoder/model-00003-of-00004.safetensors",

"text_encoder/model-00004-of-00004.safetensors",

"text_encoder/model.safetensors.index.json",

"tokenizer/added_tokens.json",

"tokenizer/chat_template.jinja",

"tokenizer/merges.txt",

"tokenizer/special_tokens_map.json",

"tokenizer/tokenizer_config.json",

"tokenizer/vocab.json",

"transformer/config.json",

"transformer/diffusion_pytorch_model-00001-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00002-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00003-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00004-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00005-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00006-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00007-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00008-of-00009.safetensors",

"transformer/diffusion_pytorch_model-00009-of-00009.safetensors",

"transformer/diffusion_pytorch_model.safetensors.index.json",

"vae/config.json",

"vae/diffusion_pytorch_model.safetensors"

] |

[

1580,

11747,

512,

605,

1017,

1671853,

788,

613,

11421896,

4727,

904,

2776833,

485,

3217,

244,

4968243304,

4991495816,

4932751040,

1691924384,

57655,

605,

2427,

1671853,

613,

4686,

3383407,

339,

4989364312,

4984214160,

4946470000,

4984213736,

4946471896,

4946451560,

4908690520,

4984232856,

1170918840,

198887,

730,

253806966

] | 57,720,467,613

|

ac7f9318f633fc4b5778c59367c8128225f1e3de

|

[

"diffusers",

"safetensors",

"image-to-image",

"en",

"zh",

"arxiv:2508.02324",

"license:apache-2.0",

"diffusers:QwenImageEditPipeline",

"region:us"

] | null |

<p align="center">

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/qwen_image_edit_logo.png" width="400"/>

<p>

<p align="center">

💜 <a href="https://chat.qwen.ai/"><b>Qwen Chat</b></a>   |   🤗 <a href="https://huggingface.co/Qwen/Qwen-Image-Edit">Hugging Face</a>   |   🤖 <a href="https://modelscope.cn/models/Qwen/Qwen-Image-Edit">ModelScope</a>   |    📑 <a href="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/Qwen_Image.pdf">Tech Report</a>    |    📑 <a href="https://qwenlm.github.io/blog/qwen-image-edit/">Blog</a>

<br>

🖥️ <a href="https://huggingface.co/spaces/Qwen/Qwen-Image-Edit">Demo</a>   |   💬 <a href="https://github.com/QwenLM/Qwen-Image/blob/main/assets/wechat.png">WeChat (微信)</a>   |   🫨 <a href="https://discord.gg/CV4E9rpNSD">Discord</a>  |    <a href="https://github.com/QwenLM/Qwen-Image">Github</a>

</p>

<p align="center">

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/edit_homepage.jpg" width="1600"/>

<p>

# Introduction

We are excited to introduce Qwen-Image-Edit, the image editing version of Qwen-Image. Built upon our 20B Qwen-Image model, Qwen-Image-Edit successfully extends Qwen-Image’s unique text rendering capabilities to image editing tasks, enabling precise text editing. Furthermore, Qwen-Image-Edit simultaneously feeds the input image into Qwen2.5-VL (for visual semantic control) and the VAE Encoder (for visual appearance control), achieving capabilities in both semantic and appearance editing. To experience the latest model, visit [Qwen Chat](https://qwen.ai) and select the "Image Editing" feature.

Key Features:

* **Semantic and Appearance Editing**: Qwen-Image-Edit supports both low-level visual appearance editing (such as adding, removing, or modifying elements, requiring all other regions of the image to remain completely unchanged) and high-level visual semantic editing (such as IP creation, object rotation, and style transfer, allowing overall pixel changes while maintaining semantic consistency).

* **Precise Text Editing**: Qwen-Image-Edit supports bilingual (Chinese and English) text editing, allowing direct addition, deletion, and modification of text in images while preserving the original font, size, and style.

* **Strong Benchmark Performance**: Evaluations on multiple public benchmarks demonstrate that Qwen-Image-Edit achieves state-of-the-art (SOTA) performance in image editing tasks, establishing it as a powerful foundation model for image editing.

## Quick Start

Install the latest version of diffusers

```

pip install git+https://github.com/huggingface/diffusers

```

The following contains a code snippet illustrating how to use the model to generate images based on text prompts:

```python

import os

from PIL import Image

import torch

from diffusers import QwenImageEditPipeline

pipeline = QwenImageEditPipeline.from_pretrained("Qwen/Qwen-Image-Edit")

print("pipeline loaded")

pipeline.to(torch.bfloat16)

pipeline.to("cuda")

pipeline.set_progress_bar_config(disable=None)

image = Image.open("./input.png").convert("RGB")

prompt = "Change the rabbit's color to purple, with a flash light background."

inputs = {

"image": image,

"prompt": prompt,

"generator": torch.manual_seed(0),

"true_cfg_scale": 4.0,

"negative_prompt": " ",

"num_inference_steps": 50,

}

with torch.inference_mode():

output = pipeline(**inputs)

output_image = output.images[0]

output_image.save("output_image_edit.png")

print("image saved at", os.path.abspath("output_image_edit.png"))

```

## Showcase

One of the highlights of Qwen-Image-Edit lies in its powerful capabilities for semantic and appearance editing. Semantic editing refers to modifying image content while preserving the original visual semantics. To intuitively demonstrate this capability, let's take Qwen's mascot—Capybara—as an example:

As can be seen, although most pixels in the edited image differ from those in the input image (the leftmost image), the character consistency of Capybara is perfectly preserved. Qwen-Image-Edit's powerful semantic editing capability enables effortless and diverse creation of original IP content.

Furthermore, on Qwen Chat, we designed a series of editing prompts centered around the 16 MBTI personality types. Leveraging these prompts, we successfully created a set of MBTI-themed emoji packs based on our mascot Capybara, effortlessly expanding the IP's reach and expression.

Moreover, novel view synthesis is another key application scenario in semantic editing. As shown in the two example images below, Qwen-Image-Edit can not only rotate objects by 90 degrees, but also perform a full 180-degree rotation, allowing us to directly see the back side of the object:

Another typical application of semantic editing is style transfer. For instance, given an input portrait, Qwen-Image-Edit can easily transform it into various artistic styles such as Studio Ghibli. This capability holds significant value in applications like virtual avatar creation:

In addition to semantic editing, appearance editing is another common image editing requirement. Appearance editing emphasizes keeping certain regions of the image completely unchanged while adding, removing, or modifying specific elements. The image below illustrates a case where a signboard is added to the scene.

As shown, Qwen-Image-Edit not only successfully inserts the signboard but also generates a corresponding reflection, demonstrating exceptional attention to detail.

Below is another interesting example, demonstrating how to remove fine hair strands and other small objects from an image.

Additionally, the color of a specific letter "n" in the image can be modified to blue, enabling precise editing of particular elements.

Appearance editing also has wide-ranging applications in scenarios such as adjusting a person's background or changing clothing. The three images below demonstrate these practical use cases respectively.

Another standout feature of Qwen-Image-Edit is its accurate text editing capability, which stems from Qwen-Image's deep expertise in text rendering. As shown below, the following two cases vividly demonstrate Qwen-Image-Edit's powerful performance in editing English text:

Qwen-Image-Edit can also directly edit Chinese posters, enabling not only modifications to large headline text but also precise adjustments to even small and intricate text elements.

Finally, let's walk through a concrete image editing example to demonstrate how to use a chained editing approach to progressively correct errors in a calligraphy artwork generated by Qwen-Image:

In this artwork, several Chinese characters contain generation errors. We can leverage Qwen-Image-Edit to correct them step by step. For instance, we can draw bounding boxes on the original image to mark the regions that need correction, instructing Qwen-Image-Edit to fix these specific areas. Here, we want the character "稽" to be correctly written within the red box, and the character "亭" to be accurately rendered in the blue region.

However, in practice, the character "稽" is relatively obscure, and the model fails to correct it correctly in one step. The lower-right component of "稽" should be "旨" rather than "日". At this point, we can further highlight the "日" portion with a red box, instructing Qwen-Image-Edit to fine-tune this detail and replace it with "旨".

Isn't it amazing? With this chained, step-by-step editing approach, we can continuously correct character errors until the desired final result is achieved.

Finally, we have successfully obtained a completely correct calligraphy version of *Lantingji Xu (Orchid Pavilion Preface)*!

In summary, we hope that Qwen-Image-Edit can further advance the field of image generation, truly lower the technical barriers to visual content creation, and inspire even more innovative applications.

## License Agreement

Qwen-Image is licensed under Apache 2.0.

## Citation

We kindly encourage citation of our work if you find it useful.

```bibtex

@misc{wu2025qwenimagetechnicalreport,

title={Qwen-Image Technical Report},

author={Chenfei Wu and Jiahao Li and Jingren Zhou and Junyang Lin and Kaiyuan Gao and Kun Yan and Sheng-ming Yin and Shuai Bai and Xiao Xu and Yilei Chen and Yuxiang Chen and Zecheng Tang and Zekai Zhang and Zhengyi Wang and An Yang and Bowen Yu and Chen Cheng and Dayiheng Liu and Deqing Li and Hang Zhang and Hao Meng and Hu Wei and Jingyuan Ni and Kai Chen and Kuan Cao and Liang Peng and Lin Qu and Minggang Wu and Peng Wang and Shuting Yu and Tingkun Wen and Wensen Feng and Xiaoxiao Xu and Yi Wang and Yichang Zhang and Yongqiang Zhu and Yujia Wu and Yuxuan Cai and Zenan Liu},

year={2025},

eprint={2508.02324},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2508.02324},

}

```

## Join Us

If you're passionate about fundamental research, we're hiring full-time employees (FTEs) and research interns. Don't wait — reach out to us at [email protected]

|

[

"multimodalart/Qwen-Image-Edit-Fast",

"Qwen/Qwen-Image-Edit",

"zerogpu-aoti/Qwen-Image-Edit-Relight",

"zerogpu-aoti/Qwen-Image-Edit-Outpaint",

"llamameta/nano-banana-experimental",

"zerogpu-aoti/Qwen-Image-Edit-Multi-Image",

"bep40/Nano-Banana",

"LPX55/Qwen-Image-Edit_Fast-Presets",

"VirtualKimi/Nano-Banana",

"ginigen/Nano-Banana-PRO",

"reallifeadi/Qwen-Qwen-Image-Edit",

"aiqtech/kofaceid",

"wavespeed/qwen-edit-image",

"zerogpu-aoti/Qwen-Image-Edit-aot-dynamic-fa3-fix-cfg",

"nazdridoy/inferoxy-hub",

"RAMASocute/Qwen-Qwen-Image-Edit",

"umint/o4-mini",

"xbai4680/sdsadsad",

"wavespeed/Qwen-Image-Edit",

"dangthr/Qwen-Image-Edit",

"TopGeneralDeng/Qwen-Qwen-Image-Edit",

"jacobcrowww/Qwen-Qwen-Image-Edit",

"cku9790/Qwen-Qwen-Image-Edit",

"TerrenceY/Qwen-Qwen-Image-Edit",

"JonathanZouari/Qwen-Qwen-Image-Edit",

"hassan1x/Qwen-Qwen-Image-Edit",

"LLMhacker/Qwen-Image-Edit-Fast",

"affgg/Qwen-Qwen-Image-Edit",

"SinniDcat/Qwen-Qwen-Image-Edit",

"jalhaq82/Qwen-Qwen-Image-Edit",

"LLMhacker/Qwen-Image-Edit",

"fengxingwei/Qwen-Qwen-Image-Edit",

"rectmedia/Qwen-Qwen-Image-Edit",

"ReallyFloppyPenguin/Qwen-Qwen-Image-Edit",

"adrawn/Qwen-Qwen-Image-Edit",

"VirtualKimi/Qwen-Image-Edit-Fast",

"MindCraft24729/Qwen-Image-Edit",

"jinwu76/Qwen-Qwen-Image-Edit",

"Muyumba/Qwen-Qwen-Image-Edit",

"FanArtFuseBeads/Qwen-Qwen-Image-Edit",

"qwer555/Qwen-Qwen-Image-Edit",

"DarwinPRR/Qwen-Qwen-Image-Edit",

"baicy/Qwen-Qwen-Image-Edit",

"sununy/ff",

"mrbui1990/Qwen-Image-Edit-Fast",

"AbdelhamedJr/Qwen-Qwen-Image-Edit",

"t3llo/Qwen-Qwen-Image-Edit",

"Vutony/Qwen-Qwen-Image-Edit",

"Usbebdhndejkss/Qwen-Qwen-Image-Edit",

"HumorBuddy/Qwen-Qwen-Image-Edit",

"racerx916/Qwen-Qwen-Image-Edit",

"WasabiPLP/Qwen-Qwen-Image-Edit",

"rohanmiriyala/Qwen-Qwen-Image-Edit",

"R127/Qwen-Qwen-Image-Edit",

"xiaowuzi/Qwen-Qwen-Image-Edit",

"ackpro789/Qwen-Qwen-Image-Edit",

"Gvqlo10c/Qwen-Qwen-Image-Edit",

"Mehdidib/Qwen-Qwen-Image-Edit",

"felipk/Qwen-Qwen-Image-Edit",

"fearslayer45/Qwen-Qwen-Image-Edit",

"gptken/Qwen-Qwen-Image-Edit",

"miangusapa/Qwen-Qwen-Image-Edit",

"tchung1970/Qwen-Image-Edit",

"alis9974/Qwen-Image-Edit2",

"cssddnnc/Qwen-Qwen-Image-Edit",

"aichimaodeyu/Qwen-Qwen-Image-Edit",

"MohanaDeepan/Qwen-Qwen-Image-Edit",

"Vigesvikes/Qwen-Qwen-Image-Edit",

"cbensimon/Qwen-Image-Edit-aot-dynamic-fa3",

"ASHWINI66929/Qwen-Qwen-Image-Edit",

"burtenshaw/Qwen-Image-Edit-MCP",

"itdog-max/Qwen-Qwen-Image-Edit",

"wakozee/Qwen-Qwen-Image-Edit",

"Sudharsannn/Qwen-Qwen-Image-Edit",

"kkvipvip/Qwen-Qwen-Image-Edit",

"stealthify/nano-banana-exp-image-edit",

"silvanin/Qwen-Qwen-Image-Edit",

"yuxingxing/Qwen-Qwen-Image-Edit",

"mgbam/yeye",

"Falln87/Qwen_Image_Suite",

"Margh0330/Qwen-Qwen-Image-Edit",

"einarhre/viswiz",

"Idusha/Qwen-Qwen-Image-Edit",

"rahulxcr/Qwen-Image-Edit",

"sunny1997/Qwen-Image-Edit-Fast",

"pmau45/Qwen-Qwen-Image-Edit",

"datxy/Qwen-Image-Edit-Fast",

"VegaLing/Vega-Qwen-Qwen-Image-Edit",

"inggaro/Qwen-Qwen-Image-Edit",

"dlschad/Qwen-Qwen-Image-Edit",

"zzhc/Qwen-Qwen-Image-Edit",

"Love680/Qwen-Qwen-Image-Edit",

"arturono/Qwen-Qwen-Image-Edit",

"umint/gpt-4.1-nano",

"umint/o3",

"Rahul-KJS/Qwen-Qwen-Image-Edit",

"Framill/Qwen-Qwen-Image-Edit",

"Nvra/Qwen-Qwen-Image-Edit",

"Avinashthehulk/Qwen-Qwen-Image-Edit",

"pistonX/Qwen-Qwen-Image-Edit",

"sormunir/Qwen-Qwen-Image-Edit",

"bep40/Qwen-Image-Edit-Multi-Image",

"marie11110/Qwen-Qwen-Image-Edit",

"chengzhigang/Qwen-Image-Edit_Fast-Presets01",

"chengzhigang/Qwen-Image-Edit-Fast-02",

"Rahul-KJS/cartoonize"

] |

[

"apache-2.0"

] | null |

[

"en",

"zh"

] | null | null |

[

"image-to-image"

] | null | null |

[

"vision"

] |

[

"image"

] |

[

"image"

] |

team

|

company

|

[

"China"

] | null | null | null | null | null | null | null | null | null |

68abccbf1935e46075b39df2

|

Wan-AI/Wan2.2-S2V-14B

|

Wan-AI

| null | 9,959

| 9,959

|

False

| 2025-08-25T02:38:55Z

| 2025-08-28T02:36:24Z

|

diffusers

| 197

| 197

| null | null | null |

[

".gitattributes",

"README.md",

"Wan2.1_VAE.pth",

"assets/471504690-b63bfa58-d5d7-4de6-a1a2-98970b06d9a7.mp4",

"assets/comp_effic.png",

"assets/logo.png",

"assets/moe_2.png",

"assets/moe_arch.png",

"assets/performance.png",

"assets/vae.png",

"config.json",

"configuration.json",

"diffusion_pytorch_model-00001-of-00004.safetensors",

"diffusion_pytorch_model-00002-of-00004.safetensors",

"diffusion_pytorch_model-00003-of-00004.safetensors",

"diffusion_pytorch_model-00004-of-00004.safetensors",

"diffusion_pytorch_model.safetensors.index.json",

"google/umt5-xxl/special_tokens_map.json",

"google/umt5-xxl/spiece.model",

"google/umt5-xxl/tokenizer.json",

"google/umt5-xxl/tokenizer_config.json",

"models_t5_umt5-xxl-enc-bf16.pth",

"wav2vec2-large-xlsr-53-english/.msc",

"wav2vec2-large-xlsr-53-english/.mv",

"wav2vec2-large-xlsr-53-english/README.md",

"wav2vec2-large-xlsr-53-english/alphabet.json",

"wav2vec2-large-xlsr-53-english/config.json",

"wav2vec2-large-xlsr-53-english/configuration.json",

"wav2vec2-large-xlsr-53-english/eval.py",

"wav2vec2-large-xlsr-53-english/flax_model.msgpack",

"wav2vec2-large-xlsr-53-english/full_eval.sh",

"wav2vec2-large-xlsr-53-english/language_model/attrs.json",

"wav2vec2-large-xlsr-53-english/language_model/lm.binary",

"wav2vec2-large-xlsr-53-english/language_model/unigrams.txt",

"wav2vec2-large-xlsr-53-english/log_mozilla-foundation_common_voice_6_0_en_test_predictions.txt",

"wav2vec2-large-xlsr-53-english/log_mozilla-foundation_common_voice_6_0_en_test_predictions_greedy.txt",

"wav2vec2-large-xlsr-53-english/log_mozilla-foundation_common_voice_6_0_en_test_targets.txt",

"wav2vec2-large-xlsr-53-english/log_speech-recognition-community-v2_dev_data_en_validation_predictions.txt",

"wav2vec2-large-xlsr-53-english/log_speech-recognition-community-v2_dev_data_en_validation_predictions_greedy.txt",

"wav2vec2-large-xlsr-53-english/log_speech-recognition-community-v2_dev_data_en_validation_targets.txt",

"wav2vec2-large-xlsr-53-english/model.safetensors",

"wav2vec2-large-xlsr-53-english/mozilla-foundation_common_voice_6_0_en_test_eval_results.txt",

"wav2vec2-large-xlsr-53-english/mozilla-foundation_common_voice_6_0_en_test_eval_results_greedy.txt",

"wav2vec2-large-xlsr-53-english/preprocessor_config.json",

"wav2vec2-large-xlsr-53-english/pytorch_model.bin",

"wav2vec2-large-xlsr-53-english/special_tokens_map.json",

"wav2vec2-large-xlsr-53-english/speech-recognition-community-v2_dev_data_en_validation_eval_results.txt",

"wav2vec2-large-xlsr-53-english/speech-recognition-community-v2_dev_data_en_validation_eval_results_greedy.txt",

"wav2vec2-large-xlsr-53-english/vocab.json"

] |

[

1300,

18697,

507609880,

9193286,

202156,

56322,

527914,

74900,

306535,

165486,

890,

43,

9968229352,

9891539248,

9956985634,

2774887624,

113150,

6623,

4548313,

16837417,

61728,

11361920418,

2328,

36,

5327,

200,

1531,

86,

6198,

1261905572,

1372,

78,

862913451,

3509871,

924339,

925177,

932146,

130354,

130796,

131489,

1261942732,

48,

49,

262,

1262069143,

85,

48,

49,

300

] | 49,148,819,983

|

eff0178482d4d6e1fed7763f6c3b3f480be908c0

|

[

"diffusers",

"safetensors",

"s2v",

"arxiv:2503.20314",

"arxiv:2508.18621",

"license:apache-2.0",

"region:us"

] | null |

# Wan2.2

<p align="center">

<img src="assets/logo.png" width="400"/>

<p>

<p align="center">

💜 <a href="https://wan.video"><b>Wan</b></a>    |    🖥️ <a href="https://github.com/Wan-Video/Wan2.2">GitHub</a>    |   🤗 <a href="https://huggingface.co/Wan-AI/">Hugging Face</a>   |   🤖 <a href="https://modelscope.cn/organization/Wan-AI">ModelScope</a>   |    📑 <a href="https://arxiv.org/abs/2503.20314">Paper</a>    |    📑 <a href="https://wan.video/welcome?spm=a2ty_o02.30011076.0.0.6c9ee41eCcluqg">Blog</a>    |    💬 <a href="https://discord.gg/AKNgpMK4Yj">Discord</a>

<br>

📕 <a href="https://alidocs.dingtalk.com/i/nodes/jb9Y4gmKWrx9eo4dCql9LlbYJGXn6lpz">使用指南(中文)</a>   |    📘 <a href="https://alidocs.dingtalk.com/i/nodes/EpGBa2Lm8aZxe5myC99MelA2WgN7R35y">User Guide(English)</a>   |   💬 <a href="https://gw.alicdn.com/imgextra/i2/O1CN01tqjWFi1ByuyehkTSB_!!6000000000015-0-tps-611-1279.jpg">WeChat(微信)</a>

<br>

-----

[**Wan: Open and Advanced Large-Scale Video Generative Models**](https://arxiv.org/abs/2503.20314) <be>

We are excited to introduce **Wan2.2**, a major upgrade to our foundational video models. With **Wan2.2**, we have focused on incorporating the following innovations:

- 👍 **Effective MoE Architecture**: Wan2.2 introduces a Mixture-of-Experts (MoE) architecture into video diffusion models. By separating the denoising process cross timesteps with specialized powerful expert models, this enlarges the overall model capacity while maintaining the same computational cost.

- 👍 **Cinematic-level Aesthetics**: Wan2.2 incorporates meticulously curated aesthetic data, complete with detailed labels for lighting, composition, contrast, color tone, and more. This allows for more precise and controllable cinematic style generation, facilitating the creation of videos with customizable aesthetic preferences.

- 👍 **Complex Motion Generation**: Compared to Wan2.1, Wan2.2 is trained on a significantly larger data, with +65.6% more images and +83.2% more videos. This expansion notably enhances the model's generalization across multiple dimensions such as motions, semantics, and aesthetics, achieving TOP performance among all open-sourced and closed-sourced models.

- 👍 **Efficient High-Definition Hybrid TI2V**: Wan2.2 open-sources a 5B model built with our advanced Wan2.2-VAE that achieves a compression ratio of **16×16×4**. This model supports both text-to-video and image-to-video generation at 720P resolution with 24fps and can also run on consumer-grade graphics cards like 4090. It is one of the fastest **720P@24fps** models currently available, capable of serving both the industrial and academic sectors simultaneously.

## Video Demos

<div align="center">

<video width="80%" controls>

<source src="https://cloud.video.taobao.com/vod/4szTT1B0LqXvJzmuEURfGRA-nllnqN_G2AT0ZWkQXoQ.mp4" type="video/mp4">

Your browser does not support the video tag.

</video>

</div>

## 🔥 Latest News!!

* Aug 26, 2025: 🎵 We introduce **[Wan2.2-S2V-14B](https://humanaigc.github.io/wan-s2v-webpage)**, an audio-driven cinematic video generation model, including [inference code](#run-speech-to-video-generation), [model weights](#model-download), and [technical report](https://humanaigc.github.io/wan-s2v-webpage/content/wan-s2v.pdf)! Now you can try it on [wan.video](https://wan.video/), [ModelScope Gradio](https://www.modelscope.cn/studios/Wan-AI/Wan2.2-S2V) or [HuggingFace Gradio](https://huggingface.co/spaces/Wan-AI/Wan2.2-S2V)!

* Jul 28, 2025: 👋 We have open a [HF space](https://huggingface.co/spaces/Wan-AI/Wan-2.2-5B) using the TI2V-5B model. Enjoy!

* Jul 28, 2025: 👋 Wan2.2 has been integrated into ComfyUI ([CN](https://docs.comfy.org/zh-CN/tutorials/video/wan/wan2_2) | [EN](https://docs.comfy.org/tutorials/video/wan/wan2_2)). Enjoy!

* Jul 28, 2025: 👋 Wan2.2's T2V, I2V and TI2V have been integrated into Diffusers ([T2V-A14B](https://huggingface.co/Wan-AI/Wan2.2-T2V-A14B-Diffusers) | [I2V-A14B](https://huggingface.co/Wan-AI/Wan2.2-I2V-A14B-Diffusers) | [TI2V-5B](https://huggingface.co/Wan-AI/Wan2.2-TI2V-5B-Diffusers)). Feel free to give it a try!

* Jul 28, 2025: 👋 We've released the inference code and model weights of **Wan2.2**.

## Community Works

If your research or project builds upon [**Wan2.1**](https://github.com/Wan-Video/Wan2.1) or [**Wan2.2**](https://github.com/Wan-Video/Wan2.2), and you would like more people to see it, please inform us.

- [DiffSynth-Studio](https://github.com/modelscope/DiffSynth-Studio) provides comprehensive support for Wan 2.2, including low-GPU-memory layer-by-layer offload, FP8 quantization, sequence parallelism, LoRA training, full training.

- [Kijai's ComfyUI WanVideoWrapper](https://github.com/kijai/ComfyUI-WanVideoWrapper) is an alternative implementation of Wan models for ComfyUI. Thanks to its Wan-only focus, it's on the frontline of getting cutting edge optimizations and hot research features, which are often hard to integrate into ComfyUI quickly due to its more rigid structure.

## 📑 Todo List

- Wan2.2-S2V Speech-to-Video

- [x] Inference code of Wan2.2-S2V

- [x] Checkpoints of Wan2.2-S2V-14B

- [ ] ComfyUI integration

- [ ] Diffusers integration

## Run Wan2.2

#### Installation

Clone the repo:

```sh

git clone https://github.com/Wan-Video/Wan2.2.git

cd Wan2.2

```

Install dependencies:

```sh

# Ensure torch >= 2.4.0

# If the installation of `flash_attn` fails, try installing the other packages first and install `flash_attn` last

pip install -r requirements.txt

```

#### Model Download

| Models | Download Links | Description |

|--------------------|---------------------------------------------------------------------------------------------------------------------------------------------|-------------|

| T2V-A14B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.2-T2V-A14B) 🤖 [ModelScope](https://modelscope.cn/models/Wan-AI/Wan2.2-T2V-A14B) | Text-to-Video MoE model, supports 480P & 720P |

| I2V-A14B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.2-I2V-A14B) 🤖 [ModelScope](https://modelscope.cn/models/Wan-AI/Wan2.2-I2V-A14B) | Image-to-Video MoE model, supports 480P & 720P |

| TI2V-5B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.2-TI2V-5B) 🤖 [ModelScope](https://modelscope.cn/models/Wan-AI/Wan2.2-TI2V-5B) | High-compression VAE, T2V+I2V, supports 720P |

| S2V-14B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.2-S2V-14B) 🤖 [ModelScope](https://modelscope.cn/models/Wan-AI/Wan2.2-S2V-14B) | Speech-to-Video model, supports 480P & 720P |

Download models using huggingface-cli:

``` sh

pip install "huggingface_hub[cli]"

huggingface-cli download Wan-AI/Wan2.2-S2V-14B --local-dir ./Wan2.2-S2V-14B

```

Download models using modelscope-cli:

``` sh

pip install modelscope

modelscope download Wan-AI/Wan2.2-S2V-14B --local_dir ./Wan2.2-S2V-14B

```

#### Run Speech-to-Video Generation

This repository supports the `Wan2.2-S2V-14B` Speech-to-Video model and can simultaneously support video generation at 480P and 720P resolutions.

- Single-GPU Speech-to-Video inference

```sh

python generate.py --task s2v-14B --size 1024*704 --ckpt_dir ./Wan2.2-S2V-14B/ --offload_model True --convert_model_dtype --prompt "Summer beach vacation style, a white cat wearing sunglasses sits on a surfboard." --image "examples/i2v_input.JPG" --audio "examples/talk.wav"

# Without setting --num_clip, the generated video length will automatically adjust based on the input audio length

```

> 💡 This command can run on a GPU with at least 80GB VRAM.

- Multi-GPU inference using FSDP + DeepSpeed Ulysses

```sh

torchrun --nproc_per_node=8 generate.py --task s2v-14B --size 1024*704 --ckpt_dir ./Wan2.2-S2V-14B/ --dit_fsdp --t5_fsdp --ulysses_size 8 --prompt "Summer beach vacation style, a white cat wearing sunglasses sits on a surfboard." --image "examples/i2v_input.JPG" --audio "examples/talk.wav"

```

- Pose + Audio driven generation

```sh

torchrun --nproc_per_node=8 generate.py --task s2v-14B --size 1024*704 --ckpt_dir ./Wan2.2-S2V-14B/ --dit_fsdp --t5_fsdp --ulysses_size 8 --prompt "a person is singing" --image "examples/pose.png" --audio "examples/sing.MP3" --pose_video "./examples/pose.mp4"

```

> 💡For the Speech-to-Video task, the `size` parameter represents the area of the generated video, with the aspect ratio following that of the original input image.

> 💡The model can generate videos from audio input combined with reference image and optional text prompt.

> 💡The `--pose_video` parameter enables pose-driven generation, allowing the model to follow specific pose sequences while generating videos synchronized with audio input.

> 💡The `--num_clip` parameter controls the number of video clips generated, useful for quick preview with shorter generation time.

## Computational Efficiency on Different GPUs

We test the computational efficiency of different **Wan2.2** models on different GPUs in the following table. The results are presented in the format: **Total time (s) / peak GPU memory (GB)**.

<div align="center">

<img src="assets/comp_effic.png" alt="" style="width: 80%;" />

</div>

> The parameter settings for the tests presented in this table are as follows:

> (1) Multi-GPU: 14B: `--ulysses_size 4/8 --dit_fsdp --t5_fsdp`, 5B: `--ulysses_size 4/8 --offload_model True --convert_model_dtype --t5_cpu`; Single-GPU: 14B: `--offload_model True --convert_model_dtype`, 5B: `--offload_model True --convert_model_dtype --t5_cpu`

(--convert_model_dtype converts model parameter types to config.param_dtype);

> (2) The distributed testing utilizes the built-in FSDP and Ulysses implementations, with FlashAttention3 deployed on Hopper architecture GPUs;

> (3) Tests were run without the `--use_prompt_extend` flag;

> (4) Reported results are the average of multiple samples taken after the warm-up phase.

-------

## Introduction of Wan2.2

**Wan2.2** builds on the foundation of Wan2.1 with notable improvements in generation quality and model capability. This upgrade is driven by a series of key technical innovations, mainly including the Mixture-of-Experts (MoE) architecture, upgraded training data, and high-compression video generation.

##### (1) Mixture-of-Experts (MoE) Architecture

Wan2.2 introduces Mixture-of-Experts (MoE) architecture into the video generation diffusion model. MoE has been widely validated in large language models as an efficient approach to increase total model parameters while keeping inference cost nearly unchanged. In Wan2.2, the A14B model series adopts a two-expert design tailored to the denoising process of diffusion models: a high-noise expert for the early stages, focusing on overall layout; and a low-noise expert for the later stages, refining video details. Each expert model has about 14B parameters, resulting in a total of 27B parameters but only 14B active parameters per step, keeping inference computation and GPU memory nearly unchanged.

<div align="center">

<img src="assets/moe_arch.png" alt="" style="width: 90%;" />

</div>

The transition point between the two experts is determined by the signal-to-noise ratio (SNR), a metric that decreases monotonically as the denoising step $t$ increases. At the beginning of the denoising process, $t$ is large and the noise level is high, so the SNR is at its minimum, denoted as ${SNR}_{min}$. In this stage, the high-noise expert is activated. We define a threshold step ${t}_{moe}$ corresponding to half of the ${SNR}_{min}$, and switch to the low-noise expert when $t<{t}_{moe}$.

<div align="center">

<img src="assets/moe_2.png" alt="" style="width: 90%;" />

</div>

To validate the effectiveness of the MoE architecture, four settings are compared based on their validation loss curves. The baseline **Wan2.1** model does not employ the MoE architecture. Among the MoE-based variants, the **Wan2.1 & High-Noise Expert** reuses the Wan2.1 model as the low-noise expert while uses the Wan2.2's high-noise expert, while the **Wan2.1 & Low-Noise Expert** uses Wan2.1 as the high-noise expert and employ the Wan2.2's low-noise expert. The **Wan2.2 (MoE)** (our final version) achieves the lowest validation loss, indicating that its generated video distribution is closest to ground-truth and exhibits superior convergence.

##### (2) Efficient High-Definition Hybrid TI2V

To enable more efficient deployment, Wan2.2 also explores a high-compression design. In addition to the 27B MoE models, a 5B dense model, i.e., TI2V-5B, is released. It is supported by a high-compression Wan2.2-VAE, which achieves a $T\times H\times W$ compression ratio of $4\times16\times16$, increasing the overall compression rate to 64 while maintaining high-quality video reconstruction. With an additional patchification layer, the total compression ratio of TI2V-5B reaches $4\times32\times32$. Without specific optimization, TI2V-5B can generate a 5-second 720P video in under 9 minutes on a single consumer-grade GPU, ranking among the fastest 720P@24fps video generation models. This model also natively supports both text-to-video and image-to-video tasks within a single unified framework, covering both academic research and practical applications.

<div align="center">

<img src="assets/vae.png" alt="" style="width: 80%;" />

</div>

##### Comparisons to SOTAs

We compared Wan2.2 with leading closed-source commercial models on our new Wan-Bench 2.0, evaluating performance across multiple crucial dimensions. The results demonstrate that Wan2.2 achieves superior performance compared to these leading models.

<div align="center">

<img src="assets/performance.png" alt="" style="width: 90%;" />

</div>

## Citation

If you find our work helpful, please cite us.

```

@article{wan2025,

title={Wan: Open and Advanced Large-Scale Video Generative Models},

author={Team Wan and Ang Wang and Baole Ai and Bin Wen and Chaojie Mao and Chen-Wei Xie and Di Chen and Feiwu Yu and Haiming Zhao and Jianxiao Yang and Jianyuan Zeng and Jiayu Wang and Jingfeng Zhang and Jingren Zhou and Jinkai Wang and Jixuan Chen and Kai Zhu and Kang Zhao and Keyu Yan and Lianghua Huang and Mengyang Feng and Ningyi Zhang and Pandeng Li and Pingyu Wu and Ruihang Chu and Ruili Feng and Shiwei Zhang and Siyang Sun and Tao Fang and Tianxing Wang and Tianyi Gui and Tingyu Weng and Tong Shen and Wei Lin and Wei Wang and Wei Wang and Wenmeng Zhou and Wente Wang and Wenting Shen and Wenyuan Yu and Xianzhong Shi and Xiaoming Huang and Xin Xu and Yan Kou and Yangyu Lv and Yifei Li and Yijing Liu and Yiming Wang and Yingya Zhang and Yitong Huang and Yong Li and You Wu and Yu Liu and Yulin Pan and Yun Zheng and Yuntao Hong and Yupeng Shi and Yutong Feng and Zeyinzi Jiang and Zhen Han and Zhi-Fan Wu and Ziyu Liu},

journal = {arXiv preprint arXiv:2503.20314},

year={2025}

}

@article{wan2025s2v,

title={Wan-S2V:Audio-Driven Cinematic Video Generation},

author={Xin Gao, Li Hu, Siqi Hu, Mingyang Huang, Chaonan Ji, Dechao Meng, Jinwei Qi, Penchong Qiao, Zhen Shen, Yafei Song, Ke Sun, Linrui Tian, Guangyuan Wang, Qi Wang, Zhongjian Wang, Jiayu Xiao, Sheng Xu, Bang Zhang, Peng Zhang, Xindi Zhang, Zhe Zhang, Jingren Zhou, Lian Zhuo},

journal={arXiv preprint arXiv:2508.18621},

year={2025}

}

```

## License Agreement

The models in this repository are licensed under the Apache 2.0 License. We claim no rights over the your generated contents, granting you the freedom to use them while ensuring that your usage complies with the provisions of this license. You are fully accountable for your use of the models, which must not involve sharing any content that violates applicable laws, causes harm to individuals or groups, disseminates personal information intended for harm, spreads misinformation, or targets vulnerable populations. For a complete list of restrictions and details regarding your rights, please refer to the full text of the [license](LICENSE.txt).

## Acknowledgements

We would like to thank the contributors to the [SD3](https://huggingface.co/stabilityai/stable-diffusion-3-medium), [Qwen](https://huggingface.co/Qwen), [umt5-xxl](https://huggingface.co/google/umt5-xxl), [diffusers](https://github.com/huggingface/diffusers) and [HuggingFace](https://huggingface.co) repositories, for their open research.

## Contact Us

If you would like to leave a message to our research or product teams, feel free to join our [Discord](https://discord.gg/AKNgpMK4Yj) or [WeChat groups](https://gw.alicdn.com/imgextra/i2/O1CN01tqjWFi1ByuyehkTSB_!!6000000000015-0-tps-611-1279.jpg)!

|

[

"Wan-AI/Wan2.2-S2V",

"mjinabq/Wan2.2-S2V",

"opparco/Wan2.2-S2V",

"ItsMpilo/Wan2.2-S2V"

] |

[

"apache-2.0"

] | null | null | null | null | null | null |

[

"s2v"

] | null | null | null |

free

|

company

|

[

"China"

] | null | null | null | null | null | null | null | null | null |

688a4ad0a0c7bbd72715e857

|

Phr00t/WAN2.2-14B-Rapid-AllInOne

|

Phr00t

|

{

"models": [

{

"_id": "6881e60ffcffaee6d84fe9e4",

"id": "Wan-AI/Wan2.2-I2V-A14B"

}

],

"relation": "finetune"

}

| 0

| 0

|

False

| 2025-07-30T16:39:44Z

| 2025-08-23T23:51:11Z

|

wan2.2

| 494

| 166

| null |

image-to-video

| null |

[

".gitattributes",

"README.md",

"v2/wan2.2-i2v-aio-v2.safetensors",

"v2/wan2.2-t2v-aio-v2.safetensors",

"v3/wan2.2-i2v-rapid-aio-540p-v3.safetensors",

"v3/wan2.2-i2v-rapid-aio-720p-v3.safetensors",

"v3/wan2.2-t2v-rapid-aio-v3.safetensors",

"v4/wan2.2-i2v-rapid-aio-v4.safetensors",

"v4/wan2.2-t2v-rapid-aio-v4.safetensors",

"v5/wan2.2-i2v-rapid-aio-v5.safetensors",

"v6/.placeholder",

"v6/wan2.2-i2v-rapid-aio-v6.safetensors",

"v6/wan2.2-t2v-rapid-aio-v6.safetensors",